
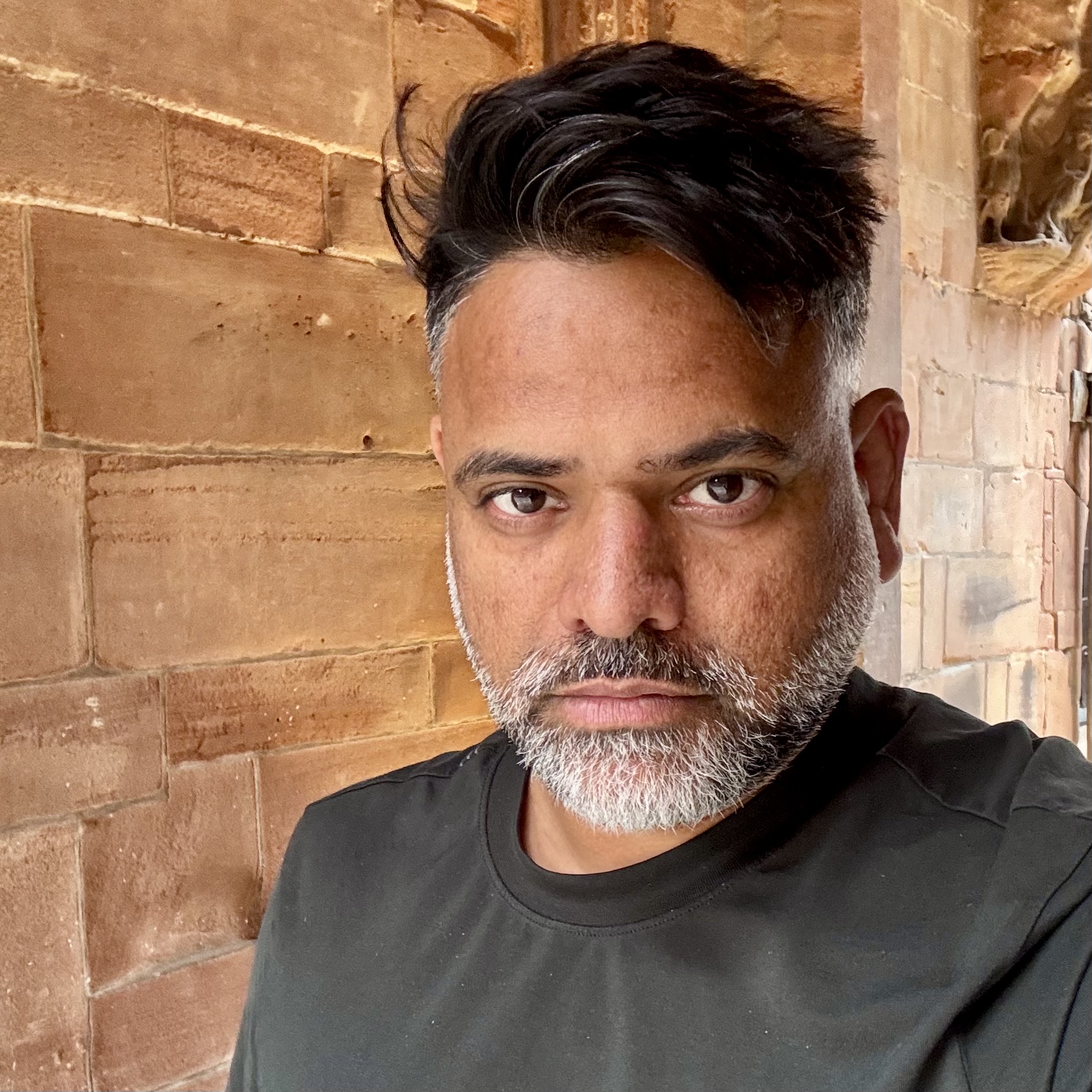

Ashutosh Trivedi, Associate Professor of Computer Science

Email: ashutosh.trivedi @ colorado.edu

Artificial intelligence is becoming part of everyday life, increasingly making decisions that affect our safety, rights, and opportunities. Think of a self-driving car deciding when to brake, a medical device choosing when to act, or software predicting whether someone might reoffend. These systems are powered by data: they learn from examples, patterns, or feedback to make decisions in real time. My research focuses on making these systems more trustworthy. That means developing tools and techniques that help engineers design AI systems that behave safely, fairly, and responsibly, even as they learn and adapt. Traditional software can be tested against rules written down in advance. In AI systems, by contrast, many of the rules are learned from data, which makes those systems harder to check, explain, or regulate.

I use ideas from formal methods, a branch of computer science that applies mathematical precision to system design, to bring clarity and accountability to AI. In contrast to industry efforts focused on building artificial general intelligence (AGI), my work emphasizes principled interaction with AI: designing methods that let humans communicate goals, constraints, and expectations precisely, transparently, and verifiably.

This includes the use of formal languages, automata, and logic to turn vague natural-language instructions into clear specifications, enabling collaborative programming with AI that is explainable and reliable. Recent projects include:

My goal is to bridge the gap between machine learning and responsible system design, helping AI systems earn the trust we increasingly place in them.

I am actively recruiting PhD students who are curious, rigorous, and excited about combining logic, learning, and impact. If you are interested in building principled, human-aligned AI systems, I encourage you to get in touch by email.